Streaming AWS Lambda with Node.js

AWS Lambda has a relatively new feature: Response streaming. This allows you to begin sending the response before the complete response is computed. How does it work? Does it work? This is what I explore in this post.

In my last post, I wrote about setting up AWS Lambda with a function URL. Now let’s explore the inner workings of Lambda a little bit. You may remember this hello-world code from last time:

    export const handler = async (event, context) => {
      return {
        statusCode: 200,
        headers: {
          "Content-Type": "text/plain",
        },
        body: "Hello world!",
      };
    };

What if we have some larger computation going on? In our spiel.digital project, we used sharp to compose images for a game field from multiple layers. Here is a (German) talk about that. I also wanted to use sharp to generate placeholder images in my placeholder-generator. The nice thing about sharp is that it can provide a Node.js stream as output. So I thought: “Why not use that new feature?”

Lambda Logging

For the following explanations it will be useful to print debugging messages from the Lambda. But currently, we do not log anything. We should have done this earlier, so let’s do it now:

data "aws_iam_policy_document" "lambda_logging" {

statement {

effect = "Allow"

actions = [

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:PutLogEvents",

]

resources = ["arn:aws:logs:*:*:*"]

}

}

resource "aws_iam_policy" "lambda_logging" {

name = "test_lambda_logging"

path = "/"

description = "IAM policy for logging from a lambda"

policy = data.aws_iam_policy_document.lambda_logging.json

}

resource "aws_iam_role_policy_attachment" "lambda_logs" {

role = aws_iam_role.iam_for_test_lambda.name

policy_arn = aws_iam_policy.lambda_logging.arn

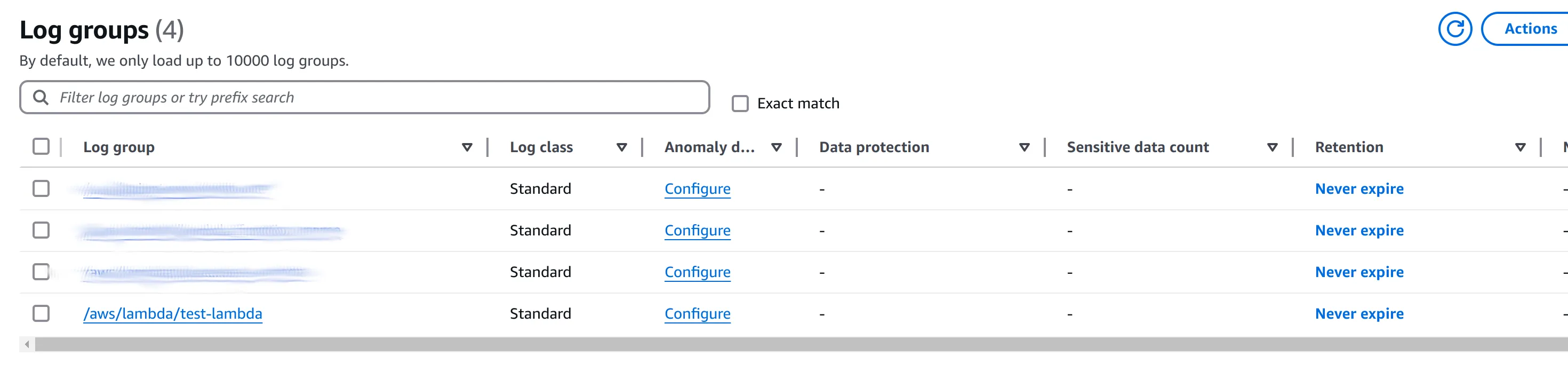

}Now, when we open CloudWatch in the browser and navigate to “Log Groups” on the left, we can see the logs for our lambda function.
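With the policy attached, anything the function writes via `console.log` should show up in that log group. Here is a minimal sketch of a debug line, assuming the function URL event uses payload format 2.0 (so `rawPath` and `requestContext.http.method` exist). The `logRequest` helper is hypothetical and not part of the example project, and in a real Lambda module `handler` would be exported.

```javascript
// Hypothetical helper: builds one JSON log line per request.
// Assumes a function URL event (payload format version 2.0).
function logRequest(event) {
  const line = JSON.stringify({
    level: "debug",
    method: event?.requestContext?.http?.method,
    path: event?.rawPath,
  });
  // In the Lambda Node.js runtime, console output is sent to CloudWatch Logs.
  console.log(line);
  return line;
}

// The hello-world handler from above, now with a debug message.
const handler = async (event, context) => {
  logRequest(event);
  return {
    statusCode: 200,
    headers: { "Content-Type": "text/plain" },
    body: "Hello world!",
  };
};
```

Writing the message as a single JSON line is a design choice that makes it easier to filter in CloudWatch later; a plain string works just as well for quick print debugging.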

Basic setup

First of all, we need to configure the Lambda for response streaming. This configuration is part of the function URL resource, so we change that part of the Terraform file to:

    resource "aws_lambda_function_url" "main_lambda_url" {
      function_name      = aws_lambda_function.main_lambda.function_name
      authorization_type = "NONE"

      # This will configure the lambda to stream the response
      invoke_mode = "RESPONSE_STREAM"
    }

Now we can modify our lambda function to stream the response. Here is a simple example:

    export const handler = awslambda.streamifyResponse(
      async (event, responseStream, context) => {
        responseStream.setContentType("text/plain");
        responseStream.write("Hello");
        setTimeout(() => {
          responseStream.end("World");
        }, 1000);
      },
    );

The response stream seems to be a Node.js Writable with an additional setContentType method.

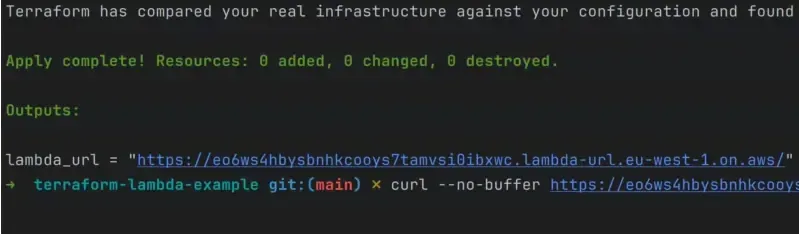

After running terraform apply we can fetch the lambda URL with curl. I use the

--no-buffer option to see the streaming in action, and this is this result:

So, everything it fine! Let’s use it!

I would have liked that…

This specific function is useful to demonstrate the streaming feature, but AWS will bill you for 1 second of Lambda time, even if the function does nothing in that time. I reduced the setTimeout to 100ms to consume less of my free tier.
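The curl check can also be reproduced from Node.js. This is a minimal sketch, assuming Node.js 18 or later, where `fetch`, `ReadableStream` and `TextDecoder` are globals. `readChunks` is a hypothetical helper, and the URL in the usage comment is a placeholder for your own function URL.

```javascript
// Hypothetical helper: reads a WHATWG ReadableStream of bytes and calls
// onChunk for every chunk as soon as it arrives.
async function readChunks(body, onChunk) {
  const reader = body.getReader();
  const decoder = new TextDecoder();
  const received = [];
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    // stream: true keeps multi-byte characters intact across chunk borders.
    const text = decoder.decode(value, { stream: true });
    received.push(text);
    onChunk(text);
  }
  return received;
}

// Usage against a real function URL (placeholder, not a real endpoint):
// const response = await fetch("https://<your-function-url>/");
// await readChunks(response.body, (text) => console.log(Date.now(), text));
```

Chunk boundaries on the client are not guaranteed to match the individual `write` calls in the Lambda, so the timestamps are more telling than the number of chunks.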

TypeScript and testing

Currently, we are using JavaScript for our Lambda function. But I prefer TypeScript, which requires some kind of type definitions. Those are usually provided in the package @types/aws-lambda, but it does not provide types for the awslambda global. Writing tests is also difficult, because the responseStream is tied to the Lambda environment and not available in unit tests.

There is a package that solves these problems: lambda-stream exports a streamifyResponse function that wraps awslambda.streamifyResponse or provides a custom implementation for non-Lambda environments. It also provides TypeScript types for the streamifyResponse function and for the ResponseStream object.

The function now looks like this:

    import { streamifyResponse } from "lambda-stream";

    export const handler = streamifyResponse(
      async (event, responseStream, context) => {
        responseStream.setContentType("text/plain");
        responseStream.write("Hello");
        setTimeout(() => {
          responseStream.end("World");
        }, 1000);
      },
    );

We also need to configure TypeScript and install @types/node, but then we get type checks and code completion.
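To illustrate the testing side: the handler body can be exercised locally with a stand-in object that mimics the few methods used here. This is a minimal sketch and not the implementation that lambda-stream ships. `createFakeResponseStream`, `helloHandler` and `runHelloTest` are hypothetical names, and the fake only covers `setContentType`, `write` and `end`.

```javascript
// Hypothetical stand-in for the Lambda response stream.
function createFakeResponseStream() {
  let resolveFinished;
  const finished = new Promise((resolve) => {
    resolveFinished = resolve;
  });
  return {
    contentType: undefined,
    chunks: [],
    finished, // resolves once end() has been called
    setContentType(type) {
      this.contentType = type;
    },
    write(chunk) {
      this.chunks.push(String(chunk));
      return true;
    },
    end(chunk) {
      if (chunk !== undefined) this.chunks.push(String(chunk));
      resolveFinished();
    },
  };
}

// The plain handler body, before it is wrapped with streamifyResponse.
async function helloHandler(event, responseStream, context) {
  responseStream.setContentType("text/plain");
  responseStream.write("Hello");
  setTimeout(() => {
    responseStream.end("World");
  }, 100);
}

// A test runs the handler and waits until the stream has been ended.
async function runHelloTest() {
  const stream = createFakeResponseStream();
  await helloHandler({}, stream, {});
  await stream.finished;
  return { contentType: stream.contentType, body: stream.chunks.join("") };
}
```

Keeping the handler body as a separate function and wrapping it with streamifyResponse only at the export is one way to make it reachable from a test runner of your choice.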

Setting status code and headers

One thing that is important for my placeholder project is response headers, specifically the Cache-Control header. I couldn’t find any official documentation about this, only a code example in the lambda-stream documentation and this blog post by Jeremy Daly.

I find it a bit confusing, but apparently, we can wrap the responseStream with another one, providing metadata at the same time:

    export const handler = streamifyResponse(
      async (event, responseStream, context) => {
        responseStream = awslambda.HttpResponseStream.from(responseStream, {
          statusCode: 200,
          headers: {
            "Content-Type": "text/plain",
            "Cache-Control": "no-cache",
          },
        });
        responseStream.write("Hello");
        setTimeout(() => {
          responseStream.end("World");
        }, 100);
      },
    );

However, this will show TypeScript errors, because awslambda has no type definitions. We can fix this by providing our own type definitions in a .d.ts file:

    import type { ResponseStream } from "lambda-stream";

    interface ResponseStreamOptions {
      statusCode: number;
      headers: Record<string, string>;
    }

    declare global {
      const awslambda: {
        HttpResponseStream: {
          from(
            responseStream: ResponseStream,
            options: ResponseStreamOptions,
          ): ResponseStream;
        };
      };
    }

Caveats

I discovered some caveats that I think I should share in this post already. For some reason, the following code does not work:

    export const handler = streamifyResponse(
      async (event, responseStream, context) => {
        responseStream = awslambda.HttpResponseStream.from(responseStream, {
          statusCode: 200,
          headers: {
            "Content-Type": "text/plain",
            "Cache-Control": "no-cache",
          },
        });
        responseStream.end("World");
      },
    );

In the Node.js world, just calling .end("World") on the stream instead of .write("World") and then .end() should be fine, and it works when clicking “Test” in the AWS Console. But it returns an Internal Server Error when calling the URL with curl. However, if I follow the example in the AWS documentation and do not replace responseStream with a new stream, it works:

    export const handler = streamifyResponse(
      async (event, responseStream, context) => {
        responseStream.setContentType("text/plain");
        responseStream.end("World");
      },
    );

But like that, we cannot set the headers. I don’t know exactly where the bug is. It happens even if I don’t use the lambda-stream package.
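For reference, the two call patterns that Node.js treats as equivalent can be compared side by side. The sketch below uses a hypothetical `writeThenEnd` helper and a hand-written recorder object instead of the real response stream. Whether the explicit `write` avoids the Internal Server Error behind a function URL is an assumption that this post does not verify.

```javascript
// Hypothetical helper: sends the final chunk with write() and ends the
// stream separately, instead of relying on end(chunk).
function writeThenEnd(stream, chunk) {
  stream.write(chunk);
  stream.end();
}

// Minimal recorder, used only to compare both call patterns locally.
function createRecorder() {
  return {
    calls: [],
    body: "",
    write(chunk) {
      this.calls.push("write");
      this.body += chunk;
      return true;
    },
    end(chunk) {
      this.calls.push("end");
      if (chunk !== undefined) this.body += chunk;
    },
  };
}

const direct = createRecorder();
direct.end("World"); // the form that failed behind the function URL

const split = createRecorder();
writeThenEnd(split, "World"); // explicit write, then end without a chunk

// Both recorders carry the same payload; only the call sequence differs.
console.log(direct.calls, split.calls, direct.body === split.body);
```

Inside the handler, the helper would be called on the stream returned by `awslambda.HttpResponseStream.from`, in place of `responseStream.end("World")`.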

Conclusion

I have tested all the examples in this post manually, and it is nice to see that response streaming is working. In fact, when I tried this for the first time, it did not work for me. I even planned to write the blog post about the failure.

This is mostly due to the lack of official documentation, in which I include the lack of type definitions. It would be really nice if someone could open a PR at https://github.com/DefinitelyTyped/DefinitelyTyped to update the types for the @types/aws-lambda package. That and a polyfill would make the lambda-stream package obsolete. The author of the package states in the README that he would appreciate that.

I think that the streaming feature is worth using despite the lack of documentation. It can improve user experience and save you some money by speeding up your page. For the time being, we can use the workarounds that I describe in this post, and hope for a better future…

The setup for this post can be found in the branch 0029-lambda-streaming of the example project